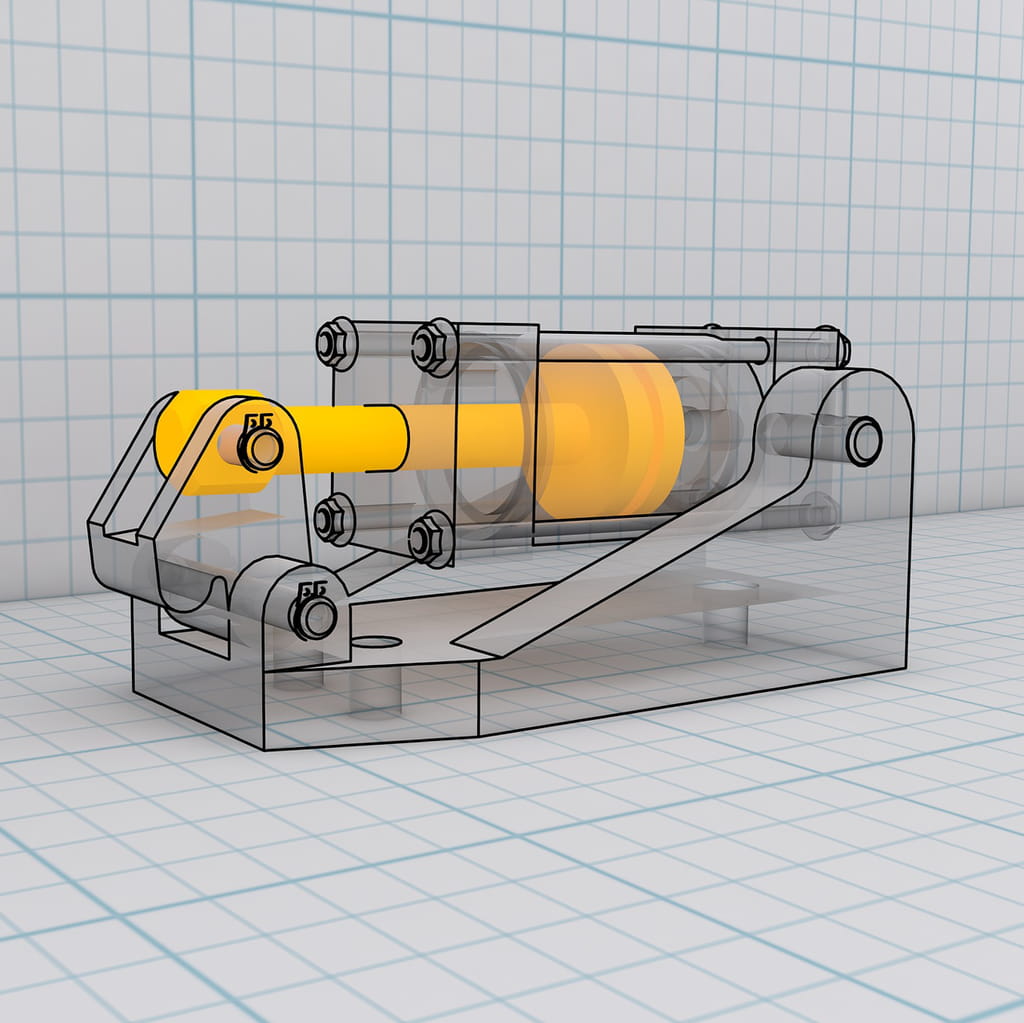

High-End Workstation PC

CUSTOM BUILT HIGH-END COMPUTING PC DESKTOP WORKSTATIONS | COMPLEX MODELLING | CAD & CAM | AUDIO & VIDEO PRODUCTION | AI & DEEP MACHINE LEARNING

Experience unparalleled computing power with Precision's Custom Built High-End PC Workstations, meticulously crafted for demanding tasks such as complex modelling, CAD/CAM, audio and video production, and AI and deep machine learning. Based in Brisbane, with over 30 years of experience (since 1993), Precision Computers is your trusted partner for high-performance computing solutions. Our workstations are built to your specifications and can be shipped Australia-wide. We also offer flexible finance options to keep them accessible for all users.

Our High-End PC Workstations are engineered with cutting-edge features:

- Powerful Processors: Featuring AMD Ryzen™ 9 and Intel® Core™ i9 processors for fast, reliable, and efficient rendering and encoding.

- Workstation Motherboards: Designed to withstand the stress and power of high-end components, ensuring premium quality and reliability.

- High-Speed RAM: Utilising high-speed RAM with suitable CAS latency to maximise processing speed and reliability.

- NVMe® Storage: Utilising high-performance NVMe storage for accelerated data transfer between client systems and storage drives.

- Professional Video Cards (GPU): Incorporating NVIDIA RTX™ and NVIDIA® Quadro® for longevity, high speeds, and premium quality in 3D design, film, and video production.

- Efficient Cooling: Designed with liquid cooling to efficiently distribute heat, resulting in better performance and longer lifespan.

- Platinum Power Supply: Provides extremely tight voltage regulation, reducing power consumption and cooling loads while improving power quality.

Investing in one of our High-End PC Workstations offers numerous benefits, including:

- Unmatched Performance: Experience top-tier performance and speed, whether for work or play.

- Long Warranty: Enjoy peace of mind with our generous warranty options.

- Expert Support: Access our dedicated technical support team for assistance when you need it.

- Customisation: Tailor your PC to your exact needs, ensuring you get the most out of your investment.

- Future-Ready: Our PCs are designed with future upgrades in mind, extending the life of your system.

Precision High-End PC Workstations are ideal for a wide range of users:

- Professionals: Engineers, graphic designers, video and animation creators, and deep learning enthusiasts.

- Architects: Creating 2D designs, 3D models, and immersive visualisations in real time.

- Virtual Reality Enthusiasts: Those seeking the most immersive VR experiences.

- Product Designers: Handling complex projects involving numerous assemblies and simulations.

- Video Editors: Speeding up video editing workflows, especially for high-resolution media and special effects.

- Artists and Animators: Working with massive scenes and complex visual effects.

Custom building is our strength. Browse our extensive range of High-End Workstation Desktop PCs below: